Top 10 Ways AI Can Shorten Time-to-Hire for 10–50-Person SaaS Startups

Jan 19, 2026

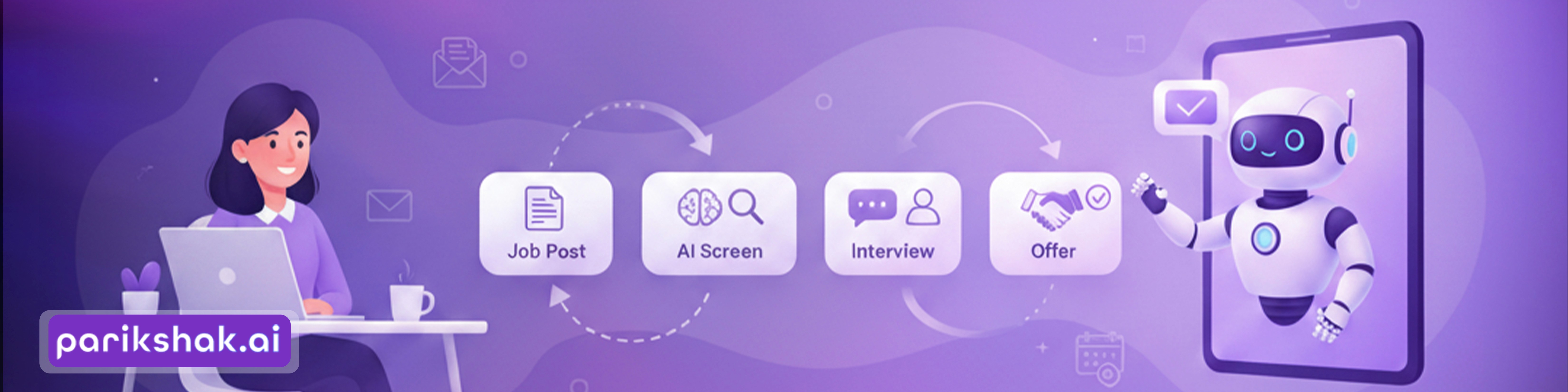

For 10–50 person SaaS startups, AI shortens time-to-hire by replacing low-signal, calendar-heavy busywork with a small number of repeatable systems (targeted sourcing, task-first screening, automated triage, asynchronous evaluation, offer orchestration and onboarding handoffs), so human time is spent only where it moves the needle: final interviews, close and onboarding.

Why this matters and what the data says

Hiring speed isn’t a nicety for small SaaS teams; it is a core operating lever. Industry benchmarks put average time-to-hire across many organisations at roughly 44 days, a cadence that frustrates candidates, bleeds momentum and costs product time. Benchmarks and reporting across HR firms point to the same persistent multi-week reality, especially for technical roles. (Staffing Industry)

At the same time, research on assessments is clear: work-sample tests (short, role-relevant tasks) are among the better predictors of on-job performance compared with resume signals alone. Meta-analyses find meaningful predictive validity for work samples, which explains why replacing resume-first filters with task-first gates can both improve hire quality and shorten the funnel when implemented well. (homepages.se.edu)

Finally, recruiting teams that adopt AI for routine tasks (writing outreach, scheduling, summarizing interviews) consistently report productivity gains, more time for high-impact interviewing and faster candidate engagement. LinkedIn and other industry reports document time-savings and improved recruiter throughput when AI removes repetitive work. (LinkedIn Business Solutions)

Parikshak.ai's internal pilots with 10–50 person SaaS customers show consistent directional impact when the team implements (A) task-first screening, (B) automated triage, and (C) offer orchestration together. Across pilots, teams reported median reductions in stage-to-stage latency of ~30–50% (varies by role complexity) and noticeable increases in interview→offer conversion when human effort was concentrated only on “green” candidates. (Parikshak.ai internal data, 2024–25; pilot customers: early-stage SaaS startups).

Each of the ten items below is written so you can act on it this week. Each follows the same structure: what & why, a quick win, and a decision rule.

1. Swap resume screening for a 45–90 minute work sample as the first gate

What & why: Give candidates a focused task that mirrors day-one work (feature stub, bug triage, short marketing plan, SQL slice). Work samples surface applied skill quickly and remove the low-signal time you spend scrolling CVs. Meta-analyses show work samples have significant predictive validity versus many traditional screens. (homepages.se.edu)

Quick win: Standardize a role-specific 60-minute task; automate submission and an initial AI score that flags passes and close-calls for human review.

Decision rule: Require a minimum pass score to invite a live interview. If the pass rate is <10%, simplify the instructions; if the pass rate is >40% with low offer conversion, raise the threshold or tighten the rubric.
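A minimal sketch of this gate and its decision rule, assuming task scores on a 0–100 scale and a pass score of 70 (borrowed from the triage example in item 3). The function names and the 10% cutoff for "low" offer conversion are hypothetical; the rule above does not define that cutoff.

```python
def invite_to_interview(task_score: float, min_pass: float = 70.0) -> bool:
    """Gate: only candidates at or above the pass score get a live interview."""
    return task_score >= min_pass


def gate_adjustment(pass_rate: float, offer_conversion: float,
                    low_conversion: float = 0.10) -> str:
    """Apply the decision rule to one cohort of work-sample submissions.

    pass_rate: share of submissions at or above the pass score (0-1).
    offer_conversion: share of interviewed candidates who received offers (0-1).
    low_conversion: hypothetical cutoff for "low" offer conversion.
    """
    if pass_rate < 0.10:
        return "simplify task instructions"
    if pass_rate > 0.40 and offer_conversion < low_conversion:
        return "raise pass threshold or tighten rubric"
    return "keep current gate"
```

For example, a cohort where 45% of submissions pass but only 6% of interviews end in offers gets "raise pass threshold or tighten rubric".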

2. Source intentful passive candidates with targeted AI prompts (not spray-and-pray)

What & why: AI can identify high-signal passive profiles (OSS contributions, blog posts, product demos) and draft personalized outreach. Targeted, personalized outreach typically gets faster, higher-quality responses than generic job posts, and industry reporting shows recruiters using AI save meaningful time on outreach and JD creation. (LinkedIn Business Solutions)

Quick win: Run one role through AI sourcing for 50 passive matches and test two outreach messages. Measure reply → task completion rate within 7 days.

Decision rule: If qualified reply rate <10% in week one, refine the targeting criteria or outreach angle.
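To make the week-one measurement concrete, here is a sketch that computes the qualified reply rate and the reply → task completion rate for each outreach variant. The `Outreach` record shape is an assumption about what you log, and "qualified" is whatever bar you set for the role.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Outreach:
    variant: str                  # which of the two outreach messages was sent ("A" or "B")
    replied: bool
    qualified: bool               # the reply came from someone who meets the role bar
    days_to_task: Optional[int]   # days from reply to task submission; None if never submitted


def variant_metrics(records, variant: str, window_days: int = 7) -> dict:
    """Qualified reply rate and reply -> task completion rate for one variant."""
    sent = [r for r in records if r.variant == variant]
    replies = [r for r in sent if r.replied]
    qualified = [r for r in replies if r.qualified]
    completed = [r for r in replies
                 if r.days_to_task is not None and r.days_to_task <= window_days]
    return {
        "qualified_reply_rate": len(qualified) / len(sent) if sent else 0.0,
        "reply_to_task_rate": len(completed) / len(replies) if replies else 0.0,
    }


def needs_retargeting(metrics: dict, floor: float = 0.10) -> bool:
    """Decision rule: below a 10% qualified reply rate, refine targeting or the outreach angle."""
    return metrics["qualified_reply_rate"] < floor
```

Running both variants through `variant_metrics` after seven days gives a like-for-like comparison of the two messages.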

3. Triage candidates with an aggregated disposition (green/hold/red)

What & why: Combine task score, brief asynchronous answers, and basic signals (notice period, comp fit) into a single disposition. Only “green” candidates get live interviews; “hold” gets micro-tasks or nurture. This dramatically reduces calendar load.

Quick win: Implement a simple disposition formula (e.g., task ≥70% = green; 50–70% = hold; <50% = red).

Decision rule: If interview→offer conversion is ≤10% after implementing triage, tighten cutoffs or add one micro-task for “holds.”
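One way to express the disposition in code, as a sketch. The task-score bands come from the formula above. The rule that any failed secondary signal (async answers, notice period, comp fit) caps a candidate at "hold", and the 8-week notice limit, are hypothetical choices to adapt.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    task_score: float   # 0-100 from the work-sample rubric
    async_ok: bool      # reviewer judged the asynchronous answers acceptable
    notice_weeks: int
    comp_fit: bool      # expectations fall inside the budgeted band


def disposition(c: Candidate, max_notice_weeks: int = 8) -> str:
    """Return "green", "hold" or "red" for one candidate."""
    if c.task_score < 50:
        return "red"
    base = "green" if c.task_score >= 70 else "hold"
    # Hypothetical rule: a strong task score cannot outweigh a failed secondary signal.
    secondary_ok = c.async_ok and c.comp_fit and c.notice_weeks <= max_notice_weeks
    if base == "green" and not secondary_ok:
        return "hold"
    return base
```

Only candidates that come back "green" are booked for live interviews; "hold" goes to a micro-task or nurture sequence.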

4. Replace early live screens with asynchronous recorded prompts

What & why: Ask 2–3 short recorded questions (code walkthrough, product trade-off, customer scenario). AI transcribes and extracts signal phrases so reviewers spend minutes per candidate instead of half an hour on a call. Research on AVIs (asynchronous video interviews) highlights reduced logistical overhead and higher reviewer throughput when structured. (ScienceDirect)

Quick win: For the next 10 candidates, replace the first live screen with an asynchronous prompt and a 5-minute AI summary for reviewers.

Decision rule: If reviewer time >30 minutes per candidate, shorten prompts or require AI highlight summaries.
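The "extract signal phrases" step would usually run on an LLM or NLP service. As a dependency-free stand-in, this sketch matches a hypothetical phrase list against a transcript and returns the matching sentences for the reviewer, plus a check for the 30-minute decision rule. Treat the phrase list as a placeholder you would tune per role.

```python
import re

# Hypothetical signal phrases for a product trade-off prompt; tune these per role.
SIGNALS = {
    "trade-off reasoning": ["trade-off", "tradeoff", "on the other hand", "cost of"],
    "customer focus": ["customer", "user impact", "churn"],
    "measurement": ["metric", "measure", "a/b test", "instrument"],
}


def highlight(transcript: str, signals: dict = SIGNALS) -> dict:
    """Map each signal label to the transcript sentences that mention one of its phrases."""
    sentences = re.split(r"(?<=[.!?])\s+", transcript.strip())
    hits = {}
    for label, phrases in signals.items():
        matched = [s for s in sentences if any(p in s.lower() for p in phrases)]
        if matched:
            hits[label] = matched
    return hits


def needs_shorter_prompts(review_minutes: list, limit: float = 30) -> bool:
    """Decision rule: flag when average reviewer time per candidate exceeds the limit."""
    if not review_minutes:
        return False
    return sum(review_minutes) / len(review_minutes) > limit
```

A keyword match is far cruder than a model-generated summary, but it shows the shape of the output reviewers should receive: a short list of labelled quotes rather than a full recording.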

5. Automate scheduling and run weekly interview “batch days”

What & why: Scheduling back-and-forth adds days. Use a scheduling bot and publish recurring interview slots so candidates can book immediately; batch technical + culture interviews on the same day to compress the cycle. This reduces latency and interviewer context switching.

Quick win: Publish two weekly interview slots and enable an auto-scheduler for first-round screens.

Decision rule: If average scheduling delay >48 hours, enforce auto-scheduling and at least one batch day.
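A sketch of the two things this rule needs: the average delay between sending a scheduling invite and the interview being booked, and a set of recurring weekly slots to publish. The function names, the Tuesday 14:00 default and the input shape are assumptions; in practice your scheduling bot would supply the timestamps.

```python
from datetime import date, datetime, time, timedelta


def avg_scheduling_delay_hours(pairs) -> float:
    """pairs: (invite_sent, interview_booked) datetimes, one pair per candidate."""
    if not pairs:
        return 0.0
    delays = [(booked - sent).total_seconds() / 3600 for sent, booked in pairs]
    return sum(delays) / len(delays)


def weekly_slots(start: date, weeks: int, weekday: int = 1, at: time = time(14, 0)):
    """Recurring interview slots on one weekday (0 = Monday) for the next `weeks` weeks."""
    days_ahead = (weekday - start.weekday()) % 7
    first = start + timedelta(days=days_ahead)
    return [datetime.combine(first + timedelta(weeks=i), at) for i in range(weeks)]


def enforce_auto_scheduling(pairs, limit_hours: float = 48) -> bool:
    """Decision rule: above a 48-hour average delay, switch to auto-scheduling and batch days."""
    return avg_scheduling_delay_hours(pairs) > limit_hours
```

Calling `weekly_slots` twice with different weekdays gives the two recurring slots suggested in the quick win.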

6. Use structured rubrics for every interview to speed decisioning

What & why: A rubric with three axes (technical, problem solving, communication), numeric scores and a one-line hire rationale keeps post-interview debriefs short and speeds up decisions. Structured interviews are more reliable and easier to defend. (Wiley Online Library)

Quick win: Replace freeform debriefs with a mandatory 3-axis rubric and require each interviewer to submit scores within 24 hours.

Decision rule: If debrief time consistently exceeds 20 minutes, make rubric submission mandatory before any discussion.
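A minimal sketch of a 3-axis scorecard with the 24-hour submission check. The 1–5 scale, the field names and the validation messages are assumptions; the three axes and the one-line rationale come from the text above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean

AXES = ("technical", "problem_solving", "communication")


@dataclass
class Scorecard:
    interviewer: str
    scores: dict           # axis -> score on an assumed 1-5 scale
    rationale: str         # one-line hire / no-hire rationale
    submitted_at: datetime


def validate(card: Scorecard, interview_end: datetime) -> list:
    """Return a list of problems; an empty list means the scorecard is complete and on time."""
    problems = []
    if set(card.scores) != set(AXES):
        problems.append("must score exactly: " + ", ".join(AXES))
    elif not all(1 <= v <= 5 for v in card.scores.values()):
        problems.append("scores must be 1-5")
    if not card.rationale.strip():
        problems.append("missing one-line rationale")
    if card.submitted_at - interview_end > timedelta(hours=24):
        problems.append("submitted after the 24-hour window")
    return problems


def axis_averages(cards) -> dict:
    """Average each axis across interviewers to anchor a short debrief."""
    return {axis: mean(c.scores[axis] for c in cards) for axis in AXES}
```

Blocking the debrief until every scorecard validates is what keeps the discussion anchored to submitted scores rather than recall.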

7. Automate reference outreach and summarization

What & why: Send a short, targeted 3-question form to referees and use AI to summarize sentiment and quote highlights. Automating references converts a multi-day process into a 24–48 hour check in most cases.

Quick win: Standardize three role-risk questions and route summaries to the hiring owner with anomaly flags.

Decision rule: If the reference response rate is <50% within 48 hours, follow up once by phone, then proceed cautiously.
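This sketch computes the 48-hour response rate and a simple anomaly flag: a referee whose average rating sits two or more points below the average of the other referees. The 1–5 rating scale, the anomaly rule and the `Reference` record are hypothetical stand-ins for whatever your AI summarizer actually flags.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional


@dataclass
class Reference:
    referee: str
    sent_at: datetime
    answered_at: Optional[datetime]   # None if the referee has not replied
    ratings: tuple = ()               # assumed 1-5 answers to the three role-risk questions


def response_rate(refs, window: timedelta = timedelta(hours=48)) -> float:
    """Share of referees who answered within the window."""
    if not refs:
        return 0.0
    on_time = [r for r in refs if r.answered_at and r.answered_at - r.sent_at <= window]
    return len(on_time) / len(refs)


def anomaly_flags(refs, gap: float = 2.0) -> list:
    """Referees whose average rating is at least `gap` below the other referees' average."""
    answered = [r for r in refs if r.ratings]
    flags = []
    for r in answered:
        others = [mean(o.ratings) for o in answered if o is not r]
        if others and mean(others) - mean(r.ratings) >= gap:
            flags.append(r.referee)
    return flags


def next_step(refs) -> str:
    """Decision rule: below 50% on-time responses, one phone follow-up, then proceed cautiously."""
    if response_rate(refs) >= 0.5:
        return "summarize and route to hiring owner"
    return "phone follow-up once, then proceed cautiously"
```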

8. Prioritize offers with a simple acceptance-probability signal

What & why: Combine notice period, task score, expressed enthusiasm and comp expectations into a lightweight acceptance score, and prioritise fast offers to high-probability candidates: you close more hires and waste fewer offer cycles. McKinsey and HR research highlight the importance of speed and candidate experience in offer acceptance. (McKinsey & Company)

Quick win: Log acceptance outcome for every offer to iterate your score.

Decision rule: If a candidate’s predicted acceptance probability is in the top 20%, move straight to a verbal offer followed by a templated written offer.
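A lightweight acceptance score can be a logistic function over the four signals named above. The weights below are invented starting values, not fitted ones; the point of logging every offer outcome is to replace them with weights fit on your own data (for example with a logistic regression). Task score and enthusiasm are assumed to be normalized to 0–1, notice period is in weeks, and the comp gap is expressed as a fraction of the offered salary.

```python
import math

# Hypothetical starting weights; refit them once you have logged offer outcomes.
WEIGHTS = {"bias": -1.0, "task": 2.0, "enthusiasm": 1.5, "comp_gap": -3.0, "notice": -0.15}


def acceptance_probability(task_score: float, enthusiasm: float, comp_gap: float,
                           notice_weeks: float, w: dict = WEIGHTS) -> float:
    """Logistic score in (0, 1); comp_gap = (asked - offered) / offered."""
    z = (w["bias"]
         + w["task"] * task_score
         + w["enthusiasm"] * enthusiasm
         + w["comp_gap"] * max(comp_gap, 0.0)   # asking below the offer is not penalized
         + w["notice"] * notice_weeks)
    return 1 / (1 + math.exp(-z))


def fast_track(candidates, top_fraction: float = 0.2) -> list:
    """candidates: (name, probability) pairs; return names in the top `top_fraction`."""
    ranked = sorted(candidates, key=lambda c: c[1], reverse=True)
    k = max(1, round(len(ranked) * top_fraction))
    return [name for name, _ in ranked[:k]]
```

Ranking within the current pipeline, rather than using a fixed probability cutoff, matches the "top 20%" wording of the rule while the weights are still uncalibrated.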

9. Template offers + negotiation guardrails to avoid email ping-pong

What & why: Templated offers with predefined bands and a negotiation script (automated assistant within guardrails) reduce delays between verbal acceptance and signed offer. A clear, fast offer path prevents candidates from cooling off or entertaining other options.

Quick win: Create standard offer templates and a short negotiation playbook; automate document generation.

Decision rule: Aim for ≤48 hours from verbal yes to signed offer for standard roles.
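A sketch of templated offer generation with a salary-band guardrail and the 48-hour check, using Python’s standard `string.Template`. The bands, role levels and template wording are hypothetical placeholders. Out-of-band requests raise an error so that a human, not the automated assistant, handles them.

```python
from datetime import datetime, timedelta
from string import Template

# Hypothetical salary bands per role level (annual base, USD).
BANDS = {"engineer_ii": (95_000, 120_000), "senior_engineer": (125_000, 155_000)}

OFFER = Template(
    "Hi $name,\n\nWe are pleased to offer you the $title role at a base salary of "
    "USD $salary per year, starting $start.\n"
)


def within_guardrails(level: str, salary: int) -> bool:
    low, high = BANDS[level]
    return low <= salary <= high


def draft_offer(name: str, title: str, level: str, salary: int, start: str) -> str:
    """Fill the template, refusing any salary outside the predefined band."""
    if not within_guardrails(level, salary):
        raise ValueError(f"{salary} is outside the {level} band; escalate to the hiring owner")
    return OFFER.substitute(name=name, title=title, salary=f"{salary:,}", start=start)


def signed_within_sla(verbal_yes: datetime, signed: datetime, hours: int = 48) -> bool:
    """Decision rule: verbal yes to signed offer within 48 hours for standard roles."""
    return signed - verbal_yes <= timedelta(hours=hours)
```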

10. Turn candidate artifacts into the first 30/60/90 plan (accelerate ramp)

What & why: Use the candidate’s task output and recorded answers to create an onboarding ramp that targets the gaps you assessed. A faster ramp means earlier contribution, which lowers the effective cost of the time spent hiring. It also closes the loop: early on-the-job performance tells you how to refine your screening tasks.

Quick win: For your next hire, build a 30/60/90 plan from their submitted work before day one.

Decision rule: If the new hire misses key 30-day milestones, map the failure to screening signals and iterate the task.
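As a sketch, the candidate’s per-criterion task scores can seed the plan: the weakest criterion becomes the 30-day focus, other gaps go into the 60-day plan, and the 90-day plan ends with a review against the screening signals, which feeds the decision rule above. The criterion names, the 1–5 scale, the threshold of 3 and the criterion-to-activity mapping are all assumptions.

```python
def ramp_plan(criterion_scores: dict, focus_by_criterion: dict, threshold: int = 3) -> dict:
    """Build a 30/60/90 skeleton from a candidate's rubric scores.

    criterion_scores: rubric criterion -> score (assumed 1-5) on the candidate's task.
    focus_by_criterion: hypothetical mapping from criterion -> ramp activity.
    """
    # Gaps are criteria scored below the threshold, weakest first.
    gaps = sorted((score, crit) for crit, score in criterion_scores.items() if score < threshold)
    plan = {"30": [], "60": [], "90": ["Review 30/60-day milestones against screening scores"]}
    for i, (_, crit) in enumerate(gaps):
        activity = focus_by_criterion.get(crit, f"Pair with a senior teammate on {crit}")
        # The single weakest gap gets the 30-day focus; the remaining gaps move to 60 days.
        plan["30" if i == 0 else "60"].append(activity)
    return plan
```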

FAQs

Q1. Will AI make hiring biased or impersonal?

A1. AI can reduce human inconsistency, but it will reproduce bias if trained on biased signals. Mitigate this with transparent rubrics, human spot checks of edge cases and metric checks for disparate impact. Use AI to structure decisions, not to replace judgment. (LinkedIn Business Solutions)

Q2. How much time can a small SaaS team realistically save?

A2. Results vary by role and implementation. Industry reports and pilot evidence commonly show material reductions when resume screens are replaced by task-first flows and scheduling and logistics are automated; in Parikshak.ai’s pilots, median stage-to-stage latency fell by roughly 30–50%. Start with one role and track a real baseline. (Staffing Industry)

Q3. Which roles should you not fully automate?

A3. Senior leadership and highly ambiguous strategic hires still need heavy human judgment. Use AI for evidence collection and logistics, but reserve the final cultural-strategic decision for experienced humans.

Closing: the operational gap

All ten tactics above are practical, low-friction engineering moves, but the persistent gap for startups is not the ideas themselves; it’s operationalising them consistently across roles without adding headcount or chaos. That requires orchestration: turn prompts → tasks → scores → interviews → offers into a predictable pipeline, with human review points only where judgment matters.

If you want that orchestration to work reliably across multiple roles (not just “that one role we tried”), you need a repeatable system that stitches these pieces together: intake, task generation, auto-scoring, dispositioning, scheduling and offer management. That way every hire follows the same evidence-first playbook rather than founder recall. Implemented this way, the effect is predictable: fewer wasted interviews, faster offers, and hires who contribute sooner.

Sources (key references for the load-bearing statements)

Average time-to-hire benchmarks (industry reporting): AMS / The Josh Bersin Company reporting and related industry summaries noting ~44 days average time-to-hire. (Staffing Industry)

Work-sample test validity (meta-analysis): Roth, P. L., et al. (2005), A Meta-Analysis of Work Sample Test Validity. (homepages.se.edu)

AI and recruiter productivity: LinkedIn Future of Recruiting / 2024 talent reports, on AI reducing recruiter time spent on routine tasks and improving productivity. (LinkedIn Business Solutions)

Asynchronous video interviews: research literature and reviews on reduced logistical overhead and higher reviewer throughput. (ScienceDirect)

Offer acceptance and hiring speed: McKinsey HR and workforce research (2024–25) on AI and hiring agility, including HR Monitor findings on offer acceptance and hiring speed. (McKinsey & Company)
